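Besides building a model from scratch, keras.applications provides several pretrained deep learning models, including the common image classification models ResNet50 and VGG16/VGG19. In practice, though, you may need to adjust the input or output layer. In a recent exercise my input was (128, 128, 3) and the output had 2 classes, which differs from the default input of (224, 224, 3) and the default output of 1000 classes. I found a few ways online to swap out model layers and am recording them here.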

★ Importing the package

from keras.applications.resnet50 import ResNet50

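★ Parameters

keras.applications.resnet50.ResNet50(

include_top=True, # whether to include the final fully-connected layer

weights='imagenet', # None: random initialization; 'imagenet': load the pretrained weights

input_tensor=None, # optional Keras tensor (the output of layers.Input()) to use as the model's input layer

input_shape=None, # when include_top=False (or weights=None, as in the example further down), the input image size can be changed (height and width must be at least 32)

pooling=None, # when include_top=False, optional pooling applied to the final output ('avg' or 'max')

classes=1000 # number of output classes, used when include_top=True and weights=None

)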

★ The original ResNet50 model

resMod = ResNet50(include_top=True, weights='imagenet')

First 3 layers of the model

| Layer (type) | Output Shape | Param # | Connected to |
|---|---|---|---|
| input_1 (InputLayer) | (None, 224, 224, 3) | 0 | |
| conv1_pad (ZeroPadding2D) | (None, 230, 230, 3) | 0 | input_1[0][0] |
| conv1 (Conv2D) | (None, 112, 112, 64) | 9472 | conv1_pad[0][0] |

Last 5 layers of the model

| Layer (type) | Output Shape | Param # | Connected to |
|---|---|---|---|
| bn5c_branch2c (BatchNormalization) | (None, 7, 7, 2048) | 8192 | res5c_branch2c[0][0] |
| add_16 (Add) | (None, 7, 7, 2048) | 0 | bn5c_branch2c[0][0], activation_46[0][0] |
| activation_49 (Activation) | (None, 7, 7, 2048) | 0 | add_16[0][0] |
| avg_pool (GlobalAveragePooling2) | (None, 2048) | 0 | activation_49[0][0] |
| fc1000 (Dense) | (None, 1000) | 2049000 | avg_pool[0][0] |

★ Changing the input and output dimensions directly, without loading pretrained weights

num_classes = 2

input_shape = (128, 128, 3)

resMod = ResNet50(include_top=True, weights=None,

input_shape=input_shape, classes=num_classes)

The resulting model already has the adjusted input and output dimensions, but its weights have to be trained from scratch.

First 3 layers of the model

| Layer (type) | Output Shape | Param # | Connected to |
|---|---|---|---|
| input_1 (InputLayer) | (None, 128, 128, 3) | 0 | |
| conv1_pad (ZeroPadding2D) | (None, 134, 134, 3) | 0 | input_1[0][0] |
| conv1 (Conv2D) | (None, 64, 64, 64) | 9472 | conv1_pad[0][0] |
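The shapes and the parameter count in these first three rows can be reproduced by hand. The sketch below is plain Python arithmetic, not Keras code. The helper names `conv_output_size` and `conv1_shapes` are made up for illustration, and it assumes that conv1 is a 7x7 convolution with 64 filters and stride 2, applied after conv1_pad adds 3 pixels on each side (which is what the 224 to 230 and 128 to 134 rows suggest).

```python
def conv_output_size(size, kernel, stride):
    """Output length of an unpadded ('valid') convolution along one axis."""
    return (size - kernel) // stride + 1


def conv1_shapes(input_size, pad=3, kernel=7, stride=2):
    """Return (padded size, conv1 output size) for a square input."""
    padded = input_size + 2 * pad  # conv1_pad adds `pad` pixels on each side
    return padded, conv_output_size(padded, kernel, stride)


# Weights (kernel_h * kernel_w * in_channels * filters) plus one bias per filter.
conv1_params = 7 * 7 * 3 * 64 + 64

for size in (224, 128):
    padded, conv1 = conv1_shapes(size)
    print(size, "->", padded, "->", conv1)

print("conv1 parameters:", conv1_params)
```

The parameter count does not depend on the input size, which is why conv1 shows 9472 parameters for both the 224 and the 128 input.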

Last 5 layers of the model

| Layer (type) | Output Shape | Param # | Connected to |
|---|---|---|---|
| bn5c_branch2c (BatchNormalization) | (None, 4, 4, 2048) | 8192 | res5c_branch2c[0][0] |
| add_16 (Add) | (None, 4, 4, 2048) | 0 | bn5c_branch2c[0][0], activation_46[0][0] |
| activation_49 (Activation) | (None, 4, 4, 2048) | 0 | add_16[0][0] |
| avg_pool (GlobalAveragePooling2) | (None, 2048) | 0 | activation_49[0][0] |
| fc1000 (Dense) | (None, 2) | 4098 | avg_pool[0][0] |

★ Loading the weights, changing the input image size, and modifying the number of output classes manually

input_shape = (128, 128, 3)

resMod = ResNet50(include_top=False, weights='imagenet',

input_shape=input_shape)

First 3 layers of the model

| Layer (type) | Output Shape | Param # | Connected to |
|---|---|---|---|
| input_1 (InputLayer) | (None, 128, 128, 3) | 0 | |
| conv1_pad (ZeroPadding2D) | (None, 134, 134, 3) | 0 | input_1[0][0] |
| conv1 (Conv2D) | (None, 64, 64, 64) | 9472 | conv1_pad[0][0] |

Last 3 layers of the model

| Layer (type) | Output Shape | Param # | Connected to |
|---|---|---|---|
| bn5c_branch2c (BatchNormalization) | (None, 4, 4, 2048) | 8192 | res5c_branch2c[0][0] |
| add_16 (Add) | (None, 4, 4, 2048) | 0 | bn5c_branch2c[0][0], activation_46[0][0] |
| activation_49 (Activation) | (None, 4, 4, 2048) | 0 | add_16[0][0] |

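With pooling=None, the original last 2 layers (avg_pool and fc1000) are removed. With pooling='avg', the GlobalAveragePooling2D layer is kept and only the final fully-connected layer is removed. With pooling='max', the GlobalAveragePooling2D layer is replaced by global max pooling, and the final layer is removed as well.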
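As a rough summary of the three cases, the sketch below computes the output shape of the model without its top. `headless_output_shape` is a hypothetical helper written for this note, not a Keras function. It assumes that ResNet50 shrinks the height and width by an overall factor of 32 (consistent with 224 to 7 and 128 to 4 in the tables) and that the input size is a multiple of 32.

```python
def headless_output_shape(input_size, pooling=None, channels=2048):
    """Output shape of ResNet50(include_top=False) for a square input.

    Assumes an overall downsampling factor of 32 and an input size that is
    a multiple of 32; `None` stands for the batch dimension.
    """
    side = input_size // 32
    if pooling is None:
        # No pooling layer: the 4D feature map of the last activation.
        return (None, side, side, channels)
    if pooling in ("avg", "max"):
        # Global pooling keeps one value per channel.
        return (None, channels)
    raise ValueError("pooling must be None, 'avg' or 'max'")


print(headless_output_shape(128))
print(headless_output_shape(128, "avg"))
print(headless_output_shape(224, "max"))
```

With pooling='avg' or pooling='max' the output is already a vector, so a Dense layer can be attached to it directly without a Flatten layer.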

Because include_top=False, the model needs an output layer added on top, for example:

from keras.layers import Dense, Dropout, Flatten

from keras.models import Model

num_classes = 2

# Output of the last layer of the headless ResNet50 (activation_49)

x = resMod.layers[-1].output

x = Flatten(name='flatten')(x)

x = Dropout(0.5)(x)

x = Dense(num_classes, activation='softmax', name='predictions')(x)

# Build the new model from the ResNet50 input to the new output layer

model = Model(inputs=resMod.input, outputs=x)

model.summary()

★ Modifying the input layer manually

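import keras

resMod = ResNet50(include_top=True, weights='imagenet')

# Pop out input layer (in Keras versions where `layers` returns a copy, this may have no effect)

resMod.layers.pop(0)

# New input layer

newInput = keras.layers.Input(batch_shape=(None, 128, 128, 3))

# Call the whole ResNet50 model on the new input (newer Keras versions may reject a shape that differs from the original input)

newOutputs = resMod(newInput)

newModel = keras.models.Model(inputs=newInput, outputs=newOutputs)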

newModel.summary()

Model structure

| Layer (type) | Output Shape | Param # |
|---|---|---|
| input_1 (InputLayer) | (None, 128, 128, 3) | 0 |
| resnet50 (Model) | multiple | 25636712 |

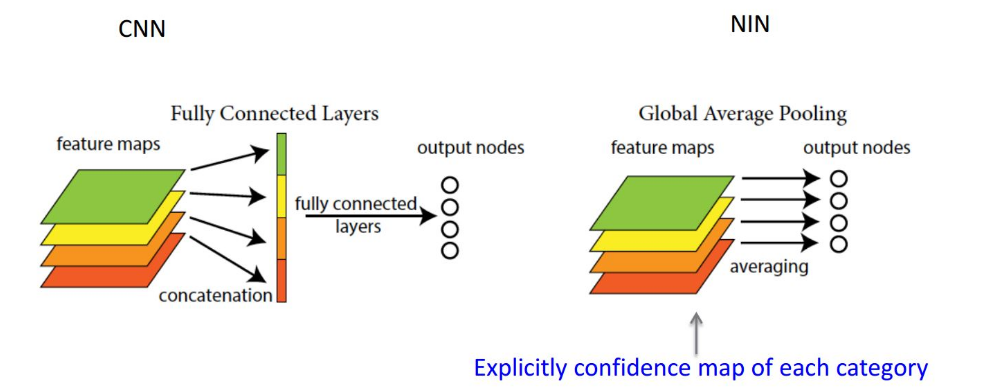

Flatten vs. GlobalPooling

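Flatten turns every cell of every feature map into one vector. GlobalPooling first reduces each feature map to a single value by max or average pooling over all of its cells, then puts those values into a vector. This addresses the problem of a fully-connected layer whose input dimension is too large and which therefore has too many parameters.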
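The difference can be seen on a toy example. This is a minimal pure-Python sketch, not the Keras implementation: `flatten`, `global_pool`, and `mean` are illustrative helpers, and the feature map is assumed to be stored channels-last as nested lists.

```python
def flatten(feature_map):
    """Concatenate every cell of every channel into one vector."""
    return [value for row in feature_map for cell in row for value in cell]


def global_pool(feature_map, reduce_fn):
    """Reduce each channel (feature map) to a single value."""
    channels = len(feature_map[0][0])
    return [
        reduce_fn([cell[c] for row in feature_map for cell in row])
        for c in range(channels)
    ]


def mean(values):
    return sum(values) / len(values)


# A tiny 2 x 2 feature map with 2 channels, laid out as [height][width][channel].
feature_map = [
    [[1.0, 10.0], [2.0, 20.0]],
    [[3.0, 30.0], [4.0, 40.0]],
]

print(flatten(feature_map))            # 8 values: height * width * channels
print(global_pool(feature_map, mean))  # 2 values: one average per channel
print(global_pool(feature_map, max))   # 2 values: one maximum per channel
```

Flatten keeps the spatial layout at the cost of a longer vector, while global pooling always produces one value per channel regardless of the height and width.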

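For example, activation_49 has shape (None, 4, 4, 2048). If the next layer is Flatten, the resulting vector has 4 * 4 * 2048 = 32768 elements; with GlobalAveragePooling it has only 2048. The number of weights in the fully-connected layer that follows shrinks accordingly.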
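To see the effect on the classifier itself, the arithmetic below counts the parameters of a 2-class Dense layer placed after each option. `dense_params` is a made-up helper name; the formula (inputs times units for the weights, plus one bias per unit) is the standard count for a fully-connected layer.

```python
def dense_params(in_features, units):
    """Weights (in_features * units) plus one bias per unit."""
    return in_features * units + units


flatten_features = 4 * 4 * 2048  # every cell of the (4, 4, 2048) feature map
gap_features = 2048              # one value per channel after global pooling
num_classes = 2

print("Dense after Flatten:", dense_params(flatten_features, num_classes))
print("Dense after global average pooling:", dense_params(gap_features, num_classes))
print("Original fc1000 layer:", dense_params(gap_features, 1000))
```

The global-pooling figure for 2 classes (4098) and the figure for 1000 classes (2049000) match the fc1000 rows in the tables earlier in this post.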

(If you repost this article, please include a link to the original.)